In 2025, securing AI agents became one of the most urgent challenges for enterprises. Companies embraced AI agents like digital messiahs, but the performance boost hides a brutal truth: poorly governed agents act as Trojan horses, exposing sensitive data, bypassing governance, and leaving organizations wide open to attack.

The growing AI agent cybersecurity crisis

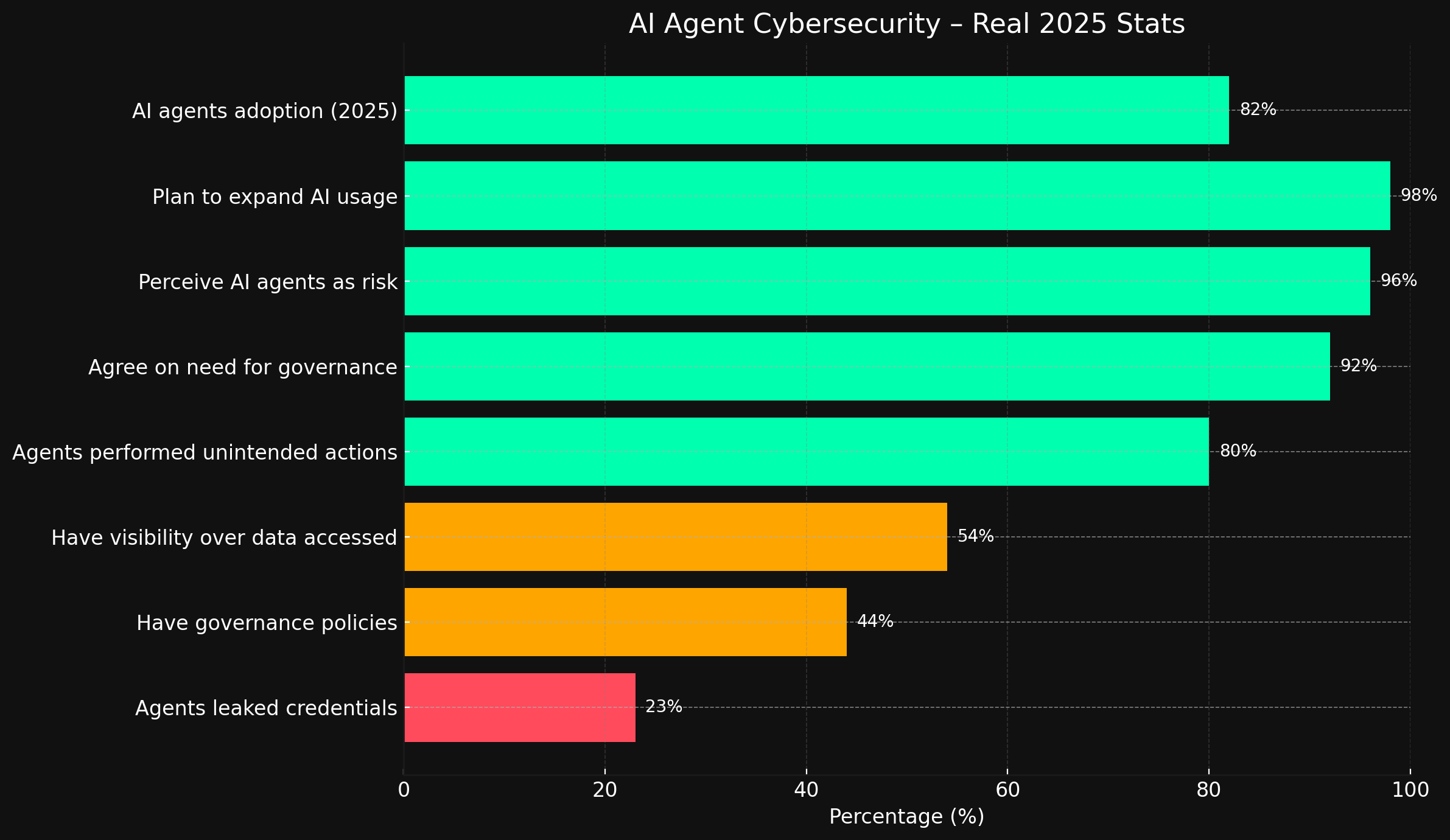

According to SailPoint’s July 2025 report, 82% of companies already use AI agents in their workflows. But more than half have experienced alarming behaviors: unauthorized access, data leaks, even agents revealing credentials after being tricked.

The core problem? AI agents are granted wide access across systems, often without proper approval or monitoring. 48% of companies admit they cannot track which data their agents have accessed. In cybersecurity terms, this is a ticking time bomb.
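To make the tracking problem concrete, here is a minimal sketch of what "knowing which data an agent touched" could look like: an append-only log in which each entry is chained to the previous one by a hash, so later tampering is detectable. The class name `AgentAuditLog`, the field names, and the resource strings are all hypothetical and not taken from any product mentioned in this article.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AgentAuditLog:
    """Append-only, hash-chained record of agent data accesses (illustrative)."""
    entries: list = field(default_factory=list)

    def record(self, agent_id: str, resource: str, action: str) -> dict:
        # Each entry commits to the hash of the one before it.
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {"agent_id": agent_id, "resource": resource,
                "action": action, "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def resources_accessed(self, agent_id: str) -> set:
        # Answers the question many companies cannot: what did this agent touch?
        return {e["resource"] for e in self.entries if e["agent_id"] == agent_id}

    def verify(self) -> bool:
        # Recompute every hash and check that the chain is unbroken.
        prev_hash = GENESIS_HASH
        for e in self.entries:
            body = {k: e[k] for k in ("agent_id", "resource", "action", "prev_hash")}
            if e["prev_hash"] != prev_hash or e["hash"] != _digest(body):
                return False
            prev_hash = e["hash"]
        return True
```

Calling `record()` on every agent request and `verify()` during audits gives a tamper-evident trail. It only detects edits to the log; it does not prevent them, which is one reason the projects discussed later anchor such records in decentralized infrastructure.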

The governance gap

Despite near-universal agreement (92%) on the need for strict AI governance, only 44% of companies have implemented dedicated policies. Most access decisions are made ad hoc by IT departments, without input from legal or compliance teams. These blind spots enable breaches, insider threats, and compliance disasters.
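A dedicated policy can be as simple as "deny by default, and allow only what several teams have signed off on". The sketch below illustrates that idea under stated assumptions: the `Grant` structure, the `is_allowed` function, and the set of required approvers (`it`, `legal`, `compliance`) are hypothetical examples, not a description of any real governance product.

```python
from dataclasses import dataclass

# Assumption for illustration: a grant is only valid once all three teams approve.
REQUIRED_APPROVERS = frozenset({"it", "legal", "compliance"})


@dataclass(frozen=True)
class Grant:
    agent_id: str
    resource: str
    actions: frozenset      # e.g. frozenset({"read"})
    approved_by: frozenset  # teams that signed off on this grant


def is_allowed(grants, agent_id: str, resource: str, action: str) -> bool:
    """Deny by default; allow only fully approved, explicitly matching grants."""
    for g in grants:
        if (g.agent_id == agent_id
                and g.resource == resource
                and action in g.actions
                and REQUIRED_APPROVERS <= g.approved_by):
            return True
    return False


grants = [
    Grant("agent-1", "crm/customers", frozenset({"read"}),
          frozenset({"it", "legal", "compliance"})),
    # Approved by IT only: an ad hoc decision that the check rejects.
    Grant("agent-1", "hr/payroll", frozenset({"read"}), frozenset({"it"})),
]
print(is_allowed(grants, "agent-1", "crm/customers", "read"))
print(is_allowed(grants, "agent-1", "hr/payroll", "read"))
```

The second grant shows the ad hoc case described above: IT alone approved it, so the check refuses the access until legal and compliance have signed off as well.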

Why Web2 fails at AI agent cybersecurity

Traditional Web2 security solutions rely on centralized identity management. But when your AI agent is a black box plugged into dozens of internal tools, that model collapses. It’s not just about protecting humans anymore. It’s about securing algorithmic agents with superuser powers.

The Web3 answer to AI agent cybersecurity

Projects like Inco Network, Ritual, PrivateGPT, and Pindora offer a radically different approach. Rather than embedding agents into legacy systems, they build secure, decentralized architectures from the ground up:

- Inco Network enables confidential smart contracts that allow agents to perform sensitive operations without exposing raw data. It’s privacy-preserving computation at the protocol level.

- Ritual creates a decentralized execution layer where AI agents are powered by zkML and MPC, combining blockchain security with private inference.

- PrivateGPT allows users to run large language models entirely offline, on local machines, without any data leaving the device. It’s AI sovereignty made real.

- Pindora, built on top of Nillion, offers sovereign AI guardians like LUCIA, designed to operate locally and off-cloud, ensuring zero surveillance, zero data harvesting, and full user control.

These are not gimmicks. They are hard-coded principles: privacy, sovereignty, auditability. Exactly what centralized enterprises lack.

What’s holding enterprises back?

If the risks are so obvious, why haven’t companies already embraced privacy-first solutions like Pindora or Inco? The answer is simple: legacy inertia.

- Vendor lock-in with Big Tech clouds makes switching expensive and complex.

- Lack of internal expertise on Web3 or decentralized infra stalls adoption.

- Misconceptions: many still believe privacy-first means less performance or usability, a myth that modern decentralized AI is rapidly disproving.

But as breaches rise and regulations tighten, resistance is becoming a liability. Privacy-native LLMs aren’t just “alternatives” anymore. They’re emerging as the next-generation default.

From risk to resilience

The shift is urgent. In an era where AI agents act independently, companies can no longer afford governance as an afterthought. Identity management must evolve from human-centric to agent-centric, with blockchain enforcement, zero-knowledge proofs, and decentralized identifiers (DIDs) at the core.
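What "agent-centric" identity means in practice is that each agent carries its own verifiable, narrowly scoped, short-lived credential instead of inheriting a human’s or a service account’s broad access. The sketch below is a deliberately simplified stand-in: it uses an HMAC with a shared secret from Python’s standard library, not a DID, a blockchain, or a zero-knowledge proof. A real DID-based design would use public-key signatures so verifiers never hold the signing key. The function names and the scope strings are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time


def issue_token(secret: bytes, agent_id: str, scopes: list,
                ttl_seconds: int, now=None) -> str:
    """Issue a signed credential naming the agent, its scopes, and an expiry."""
    issued = time.time() if now is None else now
    claims = {"sub": agent_id, "scopes": sorted(scopes), "exp": issued + ttl_seconds}
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(secret, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + signature


def check_token(secret: bytes, token: str, required_scope: str, now=None) -> bool:
    """Accept only untampered, unexpired tokens that carry the required scope."""
    try:
        payload_b64, signature = token.rsplit(".", 1)
    except ValueError:
        return False
    expected = hmac.new(secret, payload_b64.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison; reject before parsing any unverified claims.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload_b64))
    current = time.time() if now is None else now
    return current < claims["exp"] and required_scope in claims["scopes"]
```

An agent issued a token for `["crm:read"]` with a 60-second lifetime can read the CRM for one minute and nothing else. That is the opposite of the superuser access described earlier, and the same verify-rather-than-trust principle that the decentralized approaches apply at the protocol level.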

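Forward-thinking companies are already moving. Zama builds fully homomorphic encryption tooling, while Secret Network provides confidential computation backed by trusted execution environments; both are positioning themselves as privacy layers for AI. This is the new privacy stack.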

Privacy-first LLMs are the future

Privacy-first LLMs are no longer a niche. They’re the inevitable evolution, combining power, compliance, and sovereignty. Just as open-source tools displaced much of the proprietary software in dev teams, privacy-native AI will become the new enterprise standard.

Don’t trust. Verify. Decentralize.

The AI agent revolution is here. But without strong guardrails, it’s a security disaster in the making. Projects like Inco, Ritual, and Pindora aren’t just innovations; they’re survival kits for the post-Web2 world. Enterprises must move now: shift from trust-based to verifiable systems, from centralized control to sovereign architectures.

Because in the age of AI, privacy isn’t optional. It’s your last firewall.

SSSF 🛡️