The moment you finish reading this sentence, privacy will be quietly sacrificed by millions, as the allure of “free” apps and frictionless logins seduces people into trading away data for digital dopamine. That’s the reality of privacy addiction: a deep behavioral matrix that most of us can’t even see, let alone escape. What if I told you this matrix isn’t just a metaphor, but the silent architecture of our digital age? You are not the exception. Scroll, click, swipe: your data is currency, and you’re giving it away.

The story that follows is not science fiction. It’s a psychological autopsy of how we became so numb to the loss of privacy, why almost no one cares to break free, and what it will really take to reclaim control over our lives in the age of hyperconnected data.

Privacy addiction: understanding the invisible trade

Privacy addiction is not a phrase you’ll find in psychology textbooks, yet it shapes modern digital life more than any diagnosis. People have grown comfortable exchanging privacy for access, social validation, or small conveniences. Even when new privacy scandals break, the outrage lasts less than a news cycle. We return to our feeds and devices, granting permissions with a tap, rarely reading the terms, not because we’re naive, but because it’s easier to comply than to resist.

Why do so many continue this cycle, even as they grow more aware of surveillance, algorithmic profiling, and mass data collection? The answer lies in a powerful blend of behavioral economics and digital design: frictionless experiences are engineered to bypass critical thinking and trigger instant gratification. Each time you sign up with Google, accept cookies, or say “yes” to a location pop-up, you reinforce the matrix’s core premise: privacy is expendable, convenience is king.

The data matrix: how “free” became a trap

Digital platforms promise the world in exchange for your data. The average person has accepted this Faustian bargain without protest, lulled by the illusion that “free” means harmless. But there’s no such thing as free in the digital economy.

The real product isn’t the app; it’s your identity, habits, desires, and behaviors, packaged and sold to advertisers, data brokers, and sometimes even governments.

Few stop to ask: What are the long-term consequences of giving away personal data so casually? Every time you answer an online quiz, enable fitness tracking, or link a payment account to a social network, you add new layers to your digital shadow. This shadow is persistent, analyzed, resold, and used to manipulate your future choices, often in ways you’ll never notice.

Why breaking the cycle of privacy apathy is so hard

Humans are hardwired to prefer immediate rewards over distant risks. Digital designers exploit this bias masterfully, using behavioral nudges to lower our guard and normalize consent. Privacy warnings are long, confusing, and easy to ignore. The real friction is in opting out, not in opting in.

Most people know, at least in theory, that their data is valuable. So why don’t more of us fight back?

The answer is psychological fatigue. The scale and complexity of modern data ecosystems are overwhelming. It’s hard to muster outrage about something so abstract, especially when “everyone else is doing it” and daily life feels unchanged.

Studies show that even after major scandals (like Cambridge Analytica or mass biometric leaks), user habits barely shift. Instead, apathy deepens. This is the dark genius of privacy addiction: it masquerades as rational, even when it’s self-destructive.

Convenience versus privacy: who really wins?

Every major platform justifies data collection by promising personalized experiences and better services. Amazon, Facebook, Google, and TikTok all insist: “We need your data to improve your life.” The truth is less generous. Personalization does deliver benefits (smarter recommendations, faster services, and fewer irrelevant ads), but these perks come with a cost: permanent surveillance, manipulation, and the erosion of boundaries between public and private life.

Some argue that data-driven convenience is the future, and privacy is a relic of the past. Yet this tradeoff is not inevitable. Emerging privacy-first platforms, decentralized services, and new browser tools prove that you can have both utility and confidentiality, if you’re willing to change habits and demand more.

A concrete example? Banza App. Want more? Visit The Privacy Project, which chronicles efforts to reclaim privacy in a surveillance economy.

Privacy addiction: the psychological chains behind the matrix

Breaking free from privacy addiction requires more than just installing a VPN (check our exclusive deal with NYM) or switching browsers (Brave is 100x better than Chrome; who still uses Google Chrome in 2025?). It demands a fundamental rewiring of habits, attitudes, and collective norms. This is harder than it sounds! But you’re not alone: we’re here to help you every step of the way.

Tech companies have spent decades training users to trust them with everything: location, health, communications, even intimate thoughts. The reward loops are powerful. Likes, notifications, frictionless logins: they all teach you to ignore the cost of giving up privacy and focus on the next dopamine hit.

Awareness alone is not enough. Just as with other forms of addiction, real change comes only with intervention: behavioral, legal, and technological. This is why movements for digital sobriety, data minimalism, and privacy advocacy are finally gaining ground. Their message: privacy isn’t about paranoia; it’s about power.

The coming storm: the cost of a privacy-free society

Now, let’s push the lens wider. What does a fully “privacy-free” society look like? Imagine a world where algorithmic prediction replaces personal agency, where your health, emotions, relationships, and even political leanings are not just tracked, but continuously anticipated, categorized, and monetized.

When privacy is reduced to a historical footnote, control shifts quietly from individuals to systems.

- Insurance companies now set your rates using real-time streams of biometric data, and can deny claims based not only on illness but on any behavior deemed “risky” by the algorithm.

- AI-driven surveillance flags dissent or “undesirable” conduct long before it’s visible to the public, quietly building digital profiles that travel with you everywhere.

- Your reputation score, calculated from everything you post, buy, and share, shapes your access to housing, employment, and travel, often before you even realize it’s happening.

- Banks and central bank digital currencies, like the digital euro, monitor every transaction in real time. A single “suspicious” purchase, an unorthodox spending pattern, or even a social media post out of line, and you risk payment delays, blocked accounts, or being quietly locked out of essential financial services.

Manipulation now extends far beyond advertising. News, opportunities, and relationships, all of reality, are filtered, ranked, and reordered by invisible algorithms optimized not for your benefit, but for profit and control.

This is not just a dystopian warning; it’s a logical extension of current trends. Once a society normalizes trading privacy for convenience, the slope is steep and slippery. The more you share, the less control you have over how you’re shaped by the invisible hand of data.

The point of no return: why the next decade matters

Here’s the hard truth: every year, as new generations grow up “always connected,” the baseline for privacy drops. What was once shocking, like facial recognition in public spaces or AI listening to your conversations, becomes unremarkable, even desirable, in the name of personalization or security.

But there is a point of no return.

When every detail of your life is permanently stored, processed, and cross-referenced, real privacy becomes practically impossible. The cost? The loss of autonomy, genuine free will, and the right to redefine yourself outside of data-driven narratives.

If nothing changes, the future may not be a dramatic Orwellian nightmare but something quieter, more banal: a world where control and influence flow not through laws or guns, but through code, recommendation engines, and the subtle shaping of what you see and do.

It’s not too late: rebuilding the value of privacy

Urgency is real, but so is hope.

What can change the tide? First, a massive cultural shift: society must recognize privacy not as a nostalgic ideal, but as a form of digital self-defense. This means pushing for regulation, yes, but also demanding transparency, ethical design, and practical privacy defaults.

Second, the responsibility is collective.

- Creators need to stop designing for addiction and start designing for respect.

- Entrepreneurs must prove that ethical business can be profitable, by championing privacy as a unique selling point, not a compliance checkbox.

- Developers can build open-source tools and platforms that put users back in control, setting new standards for consent and security.

- Marketers should lead with value, not manipulation, refusing to prey on users’ behavioral blind spots (hello, Kaito…).

Third, education matters more than ever. Privacy literacy, knowing how your data is used and why it matters, should be as fundamental as reading or math. Parents, schools, and influencers all have a role to play.

Privacy addiction: mapping the path forward

The future of privacy will be defined by the choices made today. Do you want a world where every purchase, movement, or conversation is tracked and monetized, or one where you can reclaim your agency and decide what’s shared, and when?

The matrix is hard to see from the inside, but the exit is there. Start by demanding clarity from your apps and platforms. Use privacy tools, but more importantly, cultivate a healthy suspicion of any service that insists on knowing everything about you. Remember: Your data is not just information. It’s influence, leverage, and (in the wrong hands) risk.

For those willing to take the first step, change is not only possible, it’s necessary.

What does privacy addiction mean for you, your family, and your future? How much are you truly willing to trade for convenience, and where would you draw the line? Are you ready to question your own habits, or do you trust the system to shape your reality for you?

If this article gave you a new perspective, share it and spark the conversation that could help others break free from the data matrix…

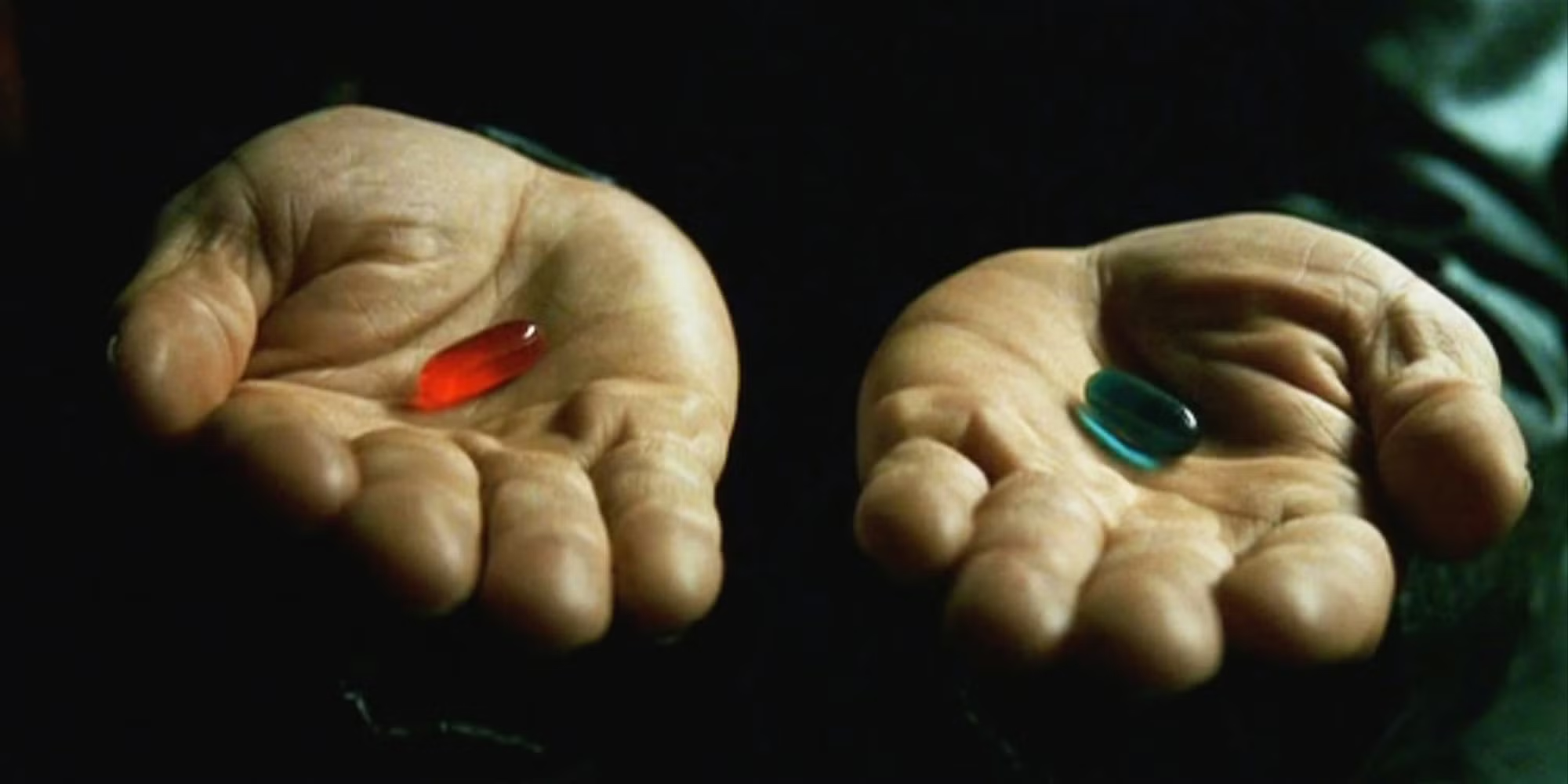

You’ve seen the truth.

- The blue pill: stay comfortable and let algorithms run your life. Your data, your choices, your freedom: all traded away for convenience.

- The red pill: wake up, reclaim your privacy, and decide your own future.

Which pill do you choose?